Thursday, May 29. 2014

Radseed: A Kernel Module for Real Randomness

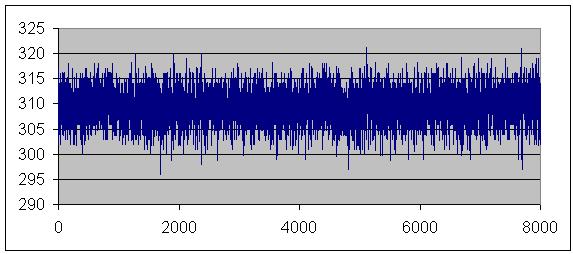

While I was originally planning to write a tiny SNMP daemon for my geiger counter so as to graph trends, I decided instead to write a kernel module exposing the counters. This way, my system can use the interrupt events as a source of true randomness (radioactive decay is, by definition, truly random).

First, a quick introduction to the random number generation bits in Linux: drivers/char/random.c. This module in essence keeps track of two things: a seed value, and a pool of entropic data to mix in. At boot, the seed is set to the previous shutdown value (if available), then mixed with device-constant sources of entropy such as MAC addresses, serial numbers, and so forth. While running, drivers such as keyboards, mice, and disk controllers contribute to seed entropy by calling one of the add_*_randomness() functions defined and exported from random.c. These functions keep track of time deltas between calls, mixing the seed value with new values from the entropy pool between reads.

The random module presents two char devices for userspace to get data: /dev/random and /dev/urandom. On each read(), an SHA1 hash is taken of the current seed value and returned to the user. This result is then mixed with the seed value itself as well as some data from the entropy pool. The difference between them is this: if the entropy pool becomes depleted after successive reads, /dev/random will block until more entropy data is available to mix in and hash, thus ensuring the output is non-deterministic. However, /dev/urandom will continue returning pseudorandom data even without entropy, simply mixing the seed with the resultant SHA1 hash each time around. Determining the seed value and predicting the output would require an inverse of SHA1 (no small feat), but it is nonetheless mathematically possible to do so given enough resources.
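To make the difference between the two devices concrete, here is a toy model of the hash-and-feed-back scheme as described above. It is only a sketch of that description, not the algorithm in random.c: the class name `ToyPool`, the 8-byte mixing step, and the use of `BlockingIOError` to stand in for a blocked read are all assumptions made for illustration.

```python
import hashlib


class ToyPool:
    """Toy model of the scheme described above; NOT the kernel's real algorithm."""

    def __init__(self, seed: bytes):
        self.seed = seed
        self.entropy = bytearray()  # entropy bytes waiting to be mixed in

    def add_entropy(self, data: bytes) -> None:
        """Stand-in for a driver contributing event data to the pool."""
        self.entropy.extend(data)

    def read(self, blocking: bool) -> bytes:
        """blocking=True models /dev/random, blocking=False models /dev/urandom."""
        if blocking and not self.entropy:
            # A real read() would sleep here; the toy model just raises.
            raise BlockingIOError("entropy pool depleted")
        out = hashlib.sha1(self.seed).digest()
        fresh = bytes(self.entropy[:8])  # hypothetical: consume up to 8 bytes per read
        del self.entropy[:8]
        # Feed the output (and any fresh entropy) back into the seed.
        self.seed = hashlib.sha1(self.seed + out + fresh).digest()
        return out
```

Two pools started from the same seed produce identical output for as long as neither receives fresh entropy, which is exactly the determinism the blocking device is meant to avoid.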

As you can see, sources of good entropy are extremely important for reasonably random numbers. While human input sources like the keyboard and mouse are still valid, disk access time data is no longer usable with SSDs due to their highly characterizable behavior. Companies like Intel have implemented hardware randomness and key generation functionality, but the closed-source nature of the beast has been cause for skepticism, particularly in the post-NSA revelation climate. For instance, OpenSSL still doesn't use the Intel key generation instructions for AES despite significant speed benefits. The point of all of this is that good random seed data is hard to get, and radioactive decay is a great source.

Back to the subject at hand: Radseed listens for hardware interrupts on the ACK line of the parallel port. When such an event is detected, the events counter is incremented and the time of the last event (in jiffies) is recorded.

jackc@kdev0 ~ $ cat /sys/kernel/radseed/events

41

jackc@kdev0 ~ $ cat /sys/kernel/radseed/last

16316569

If you want to test graphs or whatnot without a geiger counter connected, you can trigger events manually too:

jackc@kdev0 ~ $ cat /sys/kernel/radseed/trigger

1

jackc@kdev0 ~ $ cat /sys/kernel/radseed/events

42

jackc@kdev0 ~ $ cat /sys/kernel/radseed/last

16378144
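For graphing trends, the counters can be polled from userspace. The following is a minimal sketch that assumes the sysfs paths shown in the transcripts above. The helper names and the polling interval are made up for illustration, and converting `last` to seconds requires the kernel's HZ value, which has to be supplied by the caller because it depends on the kernel configuration.

```python
import time
from pathlib import Path

SYSFS_DIR = Path("/sys/kernel/radseed")  # directory as shown in the transcripts above


def read_counter(path) -> int:
    """Read one integer value from a sysfs-style file."""
    return int(Path(path).read_text().strip())


def counts_per_minute(prev_events: int, cur_events: int, elapsed_s: float) -> float:
    """Event rate between two readings of the events counter."""
    if elapsed_s <= 0:
        raise ValueError("elapsed_s must be positive")
    return (cur_events - prev_events) * 60.0 / elapsed_s


def jiffies_to_seconds(jiffies: int, hz: int) -> float:
    """Convert a jiffies delta to seconds; hz is the kernel's CONFIG_HZ (assumed known)."""
    return jiffies / hz


def poll(interval_s: float = 60.0):
    """Yield one counts-per-minute figure per interval (hypothetical helper)."""
    prev = read_counter(SYSFS_DIR / "events")
    while True:
        time.sleep(interval_s)
        cur = read_counter(SYSFS_DIR / "events")
        yield counts_per_minute(prev, cur, interval_s)
        prev = cur
```

Each value yielded by `poll()` could be handed to whatever graphing tool is in use.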

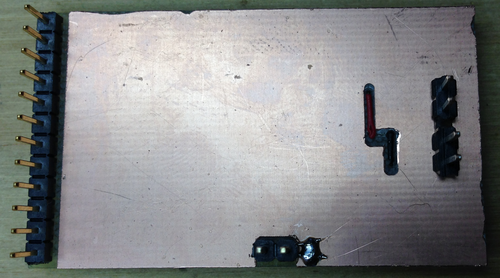

Since very few things even have parallel ports anymore (plus I have a bunch of geiger-muller tubes sitting here), I'm working on a board design for a small USB geiger counter that doesn't need external power and can be plugged into anything for easy external entropy.

Code is on GitHub: jackcarrozzo/radseed.

Wednesday, May 14. 2014

Interfacing Cirrus Logic Audio ADCs

I've been considering ideas for audio gear of various sorts over the past few months, and decided a good starting place would be a solid ADC interface from which I could prototype concepts. Lots of companies make audio converters, but I settled on two models from Cirrus Logic: the CS5361 and CS5340. They are, respectively, balanced and unbalanced two-channel 24-bit 192 kHz high dynamic range delta-sigma oversampling devices.

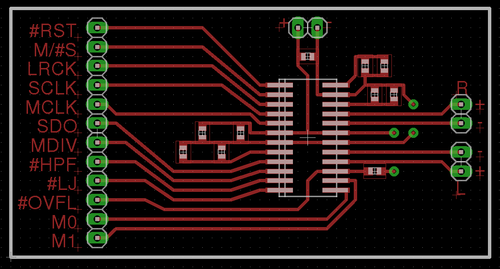

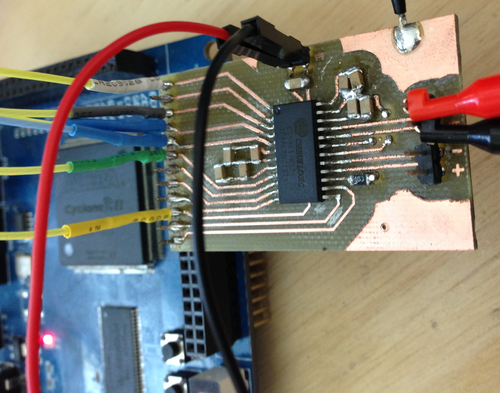

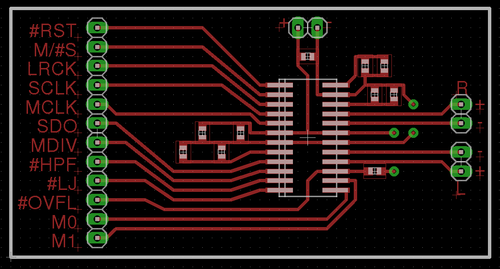

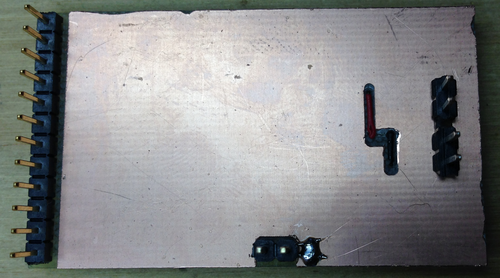

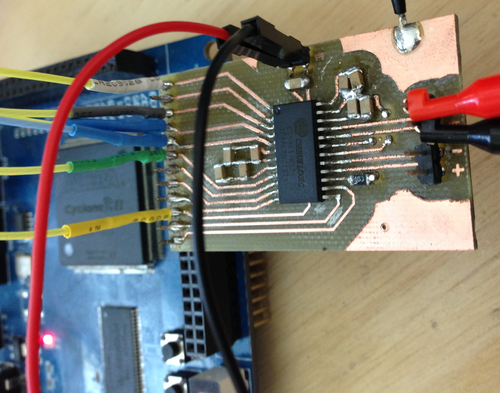

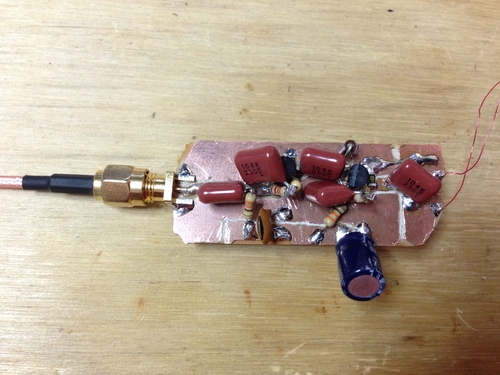

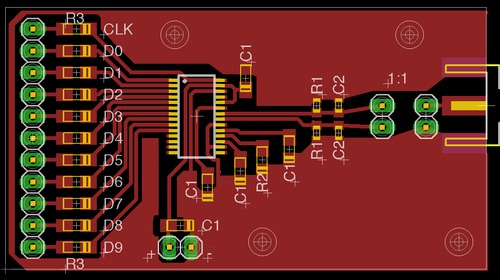

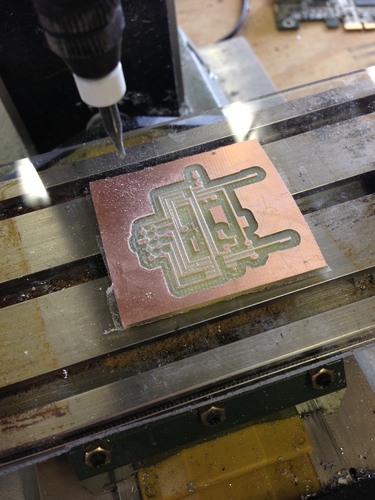

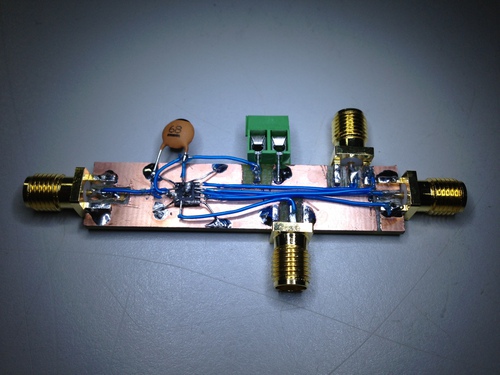

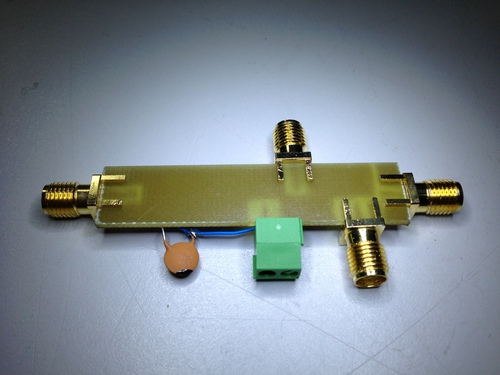

I started by laying out a super-simple protoboard for the CS5361. Since I wanted this to be quick and easy to cut on the CNC mill, the board is single-sided with the bare minimum of filtering, and without standard mixed signal board design concepts like separate analog and digital ground planes. While this will negatively affect the noise figure a bit, I wanted to get the device up and running with the minimum investment of time in case my assumptions regarding its usage and support were incorrect.

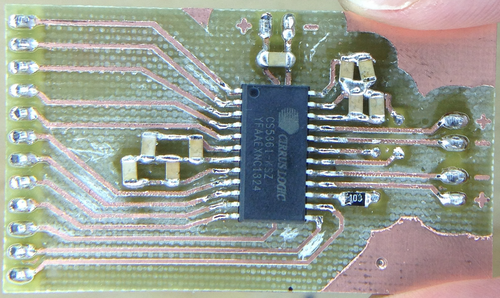

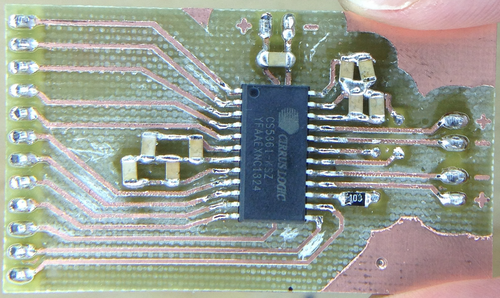

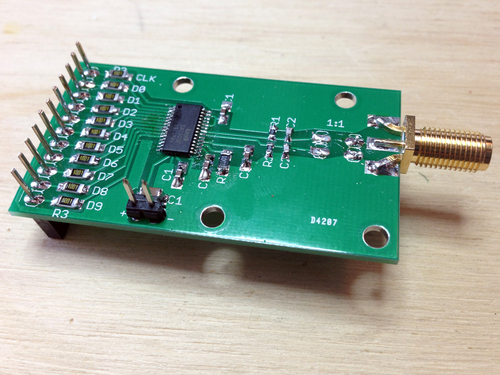

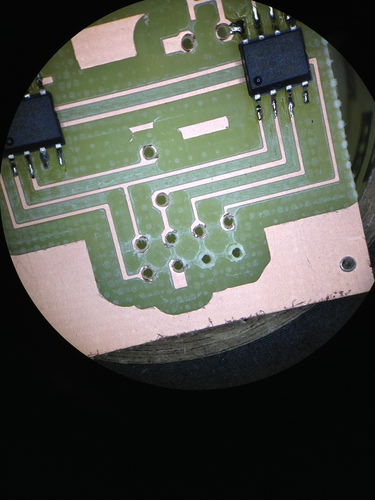

By the time I had finished the layout and cut the board, the sample order had arrived! Soldering was quick though a tad arduous - I need to use a larger 1206 footprint next time.

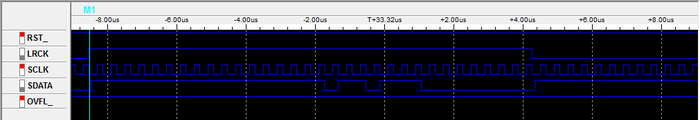

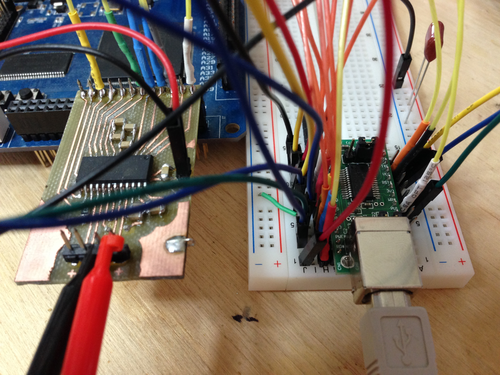

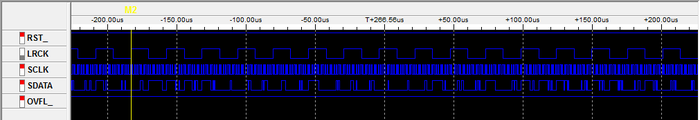

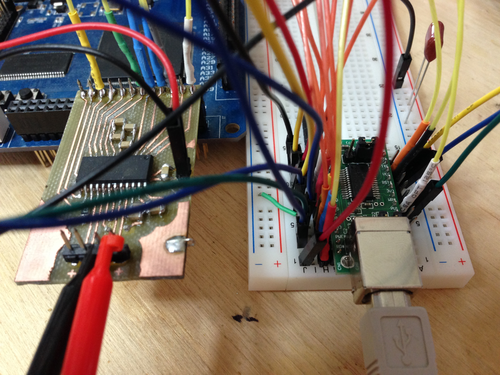

My ugly solder job notwithstanding, I plugged the unit into a breadboard, wired up an oscillator, config pins, and the logic analyzer, and was immediately rewarded with correct operation:

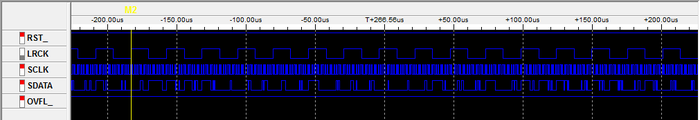

In short, by simply applying power, config pin states, and a master clock, the device will shift out ADC values (in two's complement form) MSB-first on the falling edge of SCLK (i.e., we sample on the rising edge):

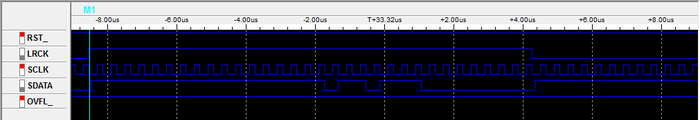

The only other line we need to worry about is LRCK, which indicates the channel being sent - you can see here that we get a left sample, then a right sample, then repeat:

With the hardware working, I set about writing the Verilog modules to nab samples from the ADC and send them off via USB (using an FT245R FIFO interface I have on hand). The code is extremely simple: on the rising edge of SCLK, samples are shifted into an 8-bit register - the size of the data bus on the USB interface. Every 8 bits, that register is strobed into the FT245R and the process repeats.

Byte alignment is provided by LRCK: on a start or reset condition from the USB host, the FPGA waits for a rising edge of LRCK before beginning the sampling and transmission of samples. This allows the client end to interpret the data stream without framing bytes: incoming data is simply shifted into 3-byte (24-bit) samples, left then right, and processed as desired.
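On the client side, unpacking that stream is straightforward. The sketch below assumes that the first byte of each 3-byte group carries the most significant bits (which follows if bits are shifted into the 8-bit register MSB-first in arrival order) and that the stream starts on a left sample. The function name `decode_samples` is made up for illustration.

```python
def decode_samples(stream: bytes):
    """Split a raw byte stream into (left, right) signed 24-bit sample pairs.

    Assumes 6-byte frames: 3 bytes left, then 3 bytes right, each big-endian
    two's complement. Any trailing partial frame is ignored.
    """
    frame = 6
    usable = len(stream) - len(stream) % frame
    pairs = []
    for i in range(0, usable, frame):
        left = int.from_bytes(stream[i:i + 3], "big", signed=True)
        right = int.from_bytes(stream[i + 3:i + 6], "big", signed=True)
        pairs.append((left, right))
    return pairs
```

For example, the six bytes `00 00 01 ff ff ff` decode to a left sample of 1 and a right sample of -1.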

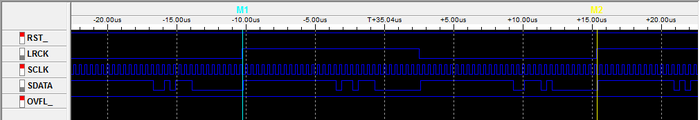

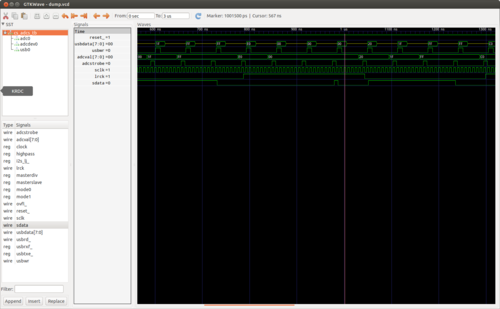

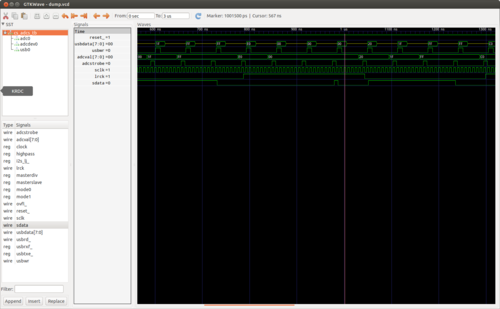

The code was quick to write and simulate with my favorite tools, iVerilog and GTKWave. I implemented a quick CS5361 module whose left and right channels count up and down (respectively) so as to simulate the rest of the pieces. Unfortunately I spent almost as much time debugging the code in hardware with the logic analyzer as I did writing the code, as Quartus (the Altera design software) is rather inconsistent in its handling of constants and register widths. (note: always read every line of output from the Altera synthesis toolchain - one can easily spend 4 hours trying to debug a problem that makes no sense only to find that the synthesis tool decided a constant was the wrong size and used zero instead)

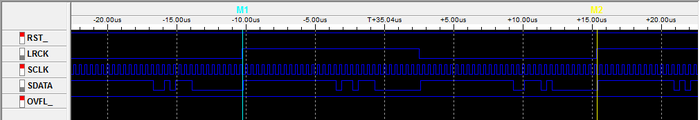

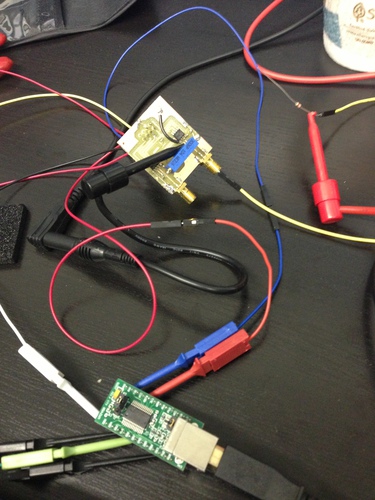

In any event, I did manage to squash the few Quartus oddities that had caused the hardware to operate differently from the simulated code, and verified that the USB interface looked correct using the logic analyzer. Time for more spaghetti:

Time to test it out. In its current state, the FPGA will assert a reset condition on any received byte from the FIFO buffer. So, we will send a reset byte then read 24 samples of 6 bytes (3 for each channel):

root@ichor ~ # echo -n 0 > /dev/ttyUSB0 && dd if=/dev/ttyUSB0 count=24 bs=6 2>/dev/null | hexdump

0000000 3238 a13f 4a68 a650 0344 bd41 6632 ceaa

0000010 78f2 28f8 9764 19ca 7185 45b4 6bfd eabf

0000020 9825 8050 65e5 6122 3adc 0830 28f0 9dfc

0000030 d026 746a e2c0 befa 57dc 1fa4 4225 7b82

0000040 a61b ed00 2320 d641 e111 b648 a2f3 8d3a

0000050 bdfb afb6 1287 5963 ff57 9c8e 63a1 3971

0000060 4bfe ac6e 5081 9fae 2b0d 5c4c 6eaa 26b7

0000070 164b 6093 8378 96d8 138c 160e e661 a81b

0000080 81bc bdae 530b ec79 3537 6ada b0cc 4773

0000090

Success! With that working, the next steps are to implement the CS5340 and add proper dynamic configuration loading. From there, I am hoping to implement ADAT input and output and a simple device driver to interface the kernel audio subsystem.

I've put the code, board design, and a bit of documentation on GitHub: jackcarrozzo/cs_adcs.

Monday, April 21. 2014

Adventures in Linux on Sun Netra T1

I set out recently to run a modern (> kernel 3.5.x) Linux on a Sun Netra T1 I had lying around, and it turned into a rather in-depth process indeed. I do have a soft spot for Sparc and Sun hardware in general, and have a few of these machines that have been running FreeBSD for ages without issue. However, I was hoping to use this particular machine with a Linux kernel driver I wrote which interfaces with my geiger counter, seeding the kernel random number generator with events.

In any event, I started by seeing if the Netra would netboot using DHCP, as I already had my dhcpd pointing to tftp. I dropped a Sparc Debian netboot image in TFTP at the right filename (the machine's intended IP in hex, 0A00000E in my case) but alas:

lom>poweron

lom>

LOM event: power on

Netra t1 (UltraSPARC-IIi 440MHz), No Keyboard

OpenBoot 3.10.25 ME, 256 MB memory installed, Serial #14265310.

Ethernet address 8:0:20:d9:ab:de, Host ID: 80d9abde.

Drive not ready

Boot device: net File and args:

Timeout waiting for ARP/RARP packet

Timeout waiting for ARP/RARP packet

Timeout waiting for ARP/RARP packet
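As an aside on the TFTP filename mentioned above: it is the client's IPv4 address written as eight uppercase hex digits. A small helper to compute it might look like the following, where the function name `tftp_filename` is made up for illustration.

```python
import ipaddress


def tftp_filename(ip: str) -> str:
    """Return the IPv4 address as 8 uppercase hex digits, e.g. for a netboot image name."""
    return format(int(ipaddress.IPv4Address(ip)), "08X")
```

For instance, `tftp_filename("10.0.0.14")` returns `0A00000E`.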

Packet logs show indeed that the Netra doesn't request DHCP, only RARP:

root@elan /etc/dhcp # tcpdump -n ether host 08:00:20:d9:ab:de

tcpdump: verbose output suppressed, use -v or -vv for full protocol decode

listening on eth0, link-type EN10MB (Ethernet), capture size 65535 bytes

10:00:16.860634 ARP, Reverse Request who-is 08:00:20:d9:ab:de tell 08:00:20:d9:ab:de, length 50

10:00:19.519799 ARP, Reverse Request who-is 08:00:20:d9:ab:de tell 08:00:20:d9:ab:de, length 50

10:00:24.180254 ARP, Reverse Request who-is 08:00:20:d9:ab:de tell 08:00:20:d9:ab:de, length 50

OK, no problem. I installed, configured, and started rarpd:

root@elan /tftpboot/sparc # cat /etc/ethers

08:00:20:d9:ab:de sparc0

root@elan /tftpboot/sparc # grep sparc0 /etc/hosts

11.0.0.14 sparc0.priv.crepinc.com sparc0

root@elan /tftpboot/sparc # rarpd -A -v -d -b /tftpboot/sparc

Then again booted the machine:

ok boot net

Boot device: /pci@1f,0/pci@1,1/network@1,1 File and args:

a00000 Fast Data Access MMU Miss

Hmmmm. The machine begins loading the image, but stops at byte 0xa00000 and prints the above. Googling shows that this is a known bug on this hardware (see https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=658588) - apparently the Debian netboot images got too large after Lenny and Netra is no longer supported.

OK, let's try a Lenny image, and we can build the fresh kernel after install:

[ 0.000000] PROMLIB: Sun IEEE Boot Prom 'OBP 3.10.25 2000/01/17 21:26'

[ 0.000000] PROMLIB: Root node compatible: sun4u .25 2000/01/17 21:26'

[ 0.000000] Initializing cgroup subsys cpu sun4u

[ 0.000000] Linux version 2.6.26-2-sparc64 (Debian 2.6.26-29) (dannf@debian.ooorg) (gcc verrg) (gcc version 4.1.3 20080704 (prerelease) (Debian 4.1.2-25)) #1 Sun Mar 4 21:::2:17:20 UTC 2012 Linux version 2.6.26-2-sparc64 (Debian 2.6.26-29) (dannf@debian.o

Nice! The kernel boots and the installer loads but... another known bug: the SCSI controller isn't located. Sigh. I then tried a few Ubuntu versions, all of which were too large and triggered the MMU miss above.

Next on the list of possible distros (even just to use as a jumping off point to bootstrap the system) is Gentoo. I nabbed the netboot image, dropped it in the TFTP dir, and we were in business:

ok boot net

Boot device: /pci@1f,0/pci@1,1/network@1,1 File and args:

846200

PROMLIB: Sun IEEE Boot Prom 'OBP 3.10.25 2000/01/17 21:26'

PROMLIB: Root node compatible: sun4u

Linux version 2.6.32-gentoo-r7 (root@bender) (gcc version 4.3.3 (Gentoo 4.3.3 p1.0) ) #1 SMP Tue Apr 13 22:46:39 UTC 2010

[...]

Gentoo/SPARC Netboot for Sun UltraSparc Systems

Build Date: April 13, 2014

Nice! Given the number of years it's been since I built a Gentoo box, I followed the handbook for Sparc: http://www.gentoo.org/doc/en/handbook/handbook-sparc.xml. A couple notes:

(1) Due to the small size of my disk and the enormous number of files in recent portage, I kept running out of inodes while untarring. I ended up making /usr much larger than it needed to be and asking mkfs for the maximum number of inodes (-i 1024) to solve it.

(2) For some reason, the permissions of items in /dev on the install chroot were not to emerge's liking. chmod a+rw /dev/* provided the quick fix (for obvious reasons, don't do that on any real system).

(3) The kernel I built was a bit too large, though as the handbook specifies, I was able to strip it down to the required size:

(chroot) netboot linux # du -hs vmlinux

7.8M vmlinux

(chroot) netboot linux # strip -R .comment -R .note vmlinux

(chroot) netboot linux # du -hs vmlinux

5.7M vmlinux

(4) I had originally compiled OpenBoot PROM support into the kernel; however, probing at boot would lock the machine:

[ 40.238603] /pci@1f,0/pci@1,1/ebus@1/flashprom@10,0: OBP Flash, RD 1fff0000000[100000] WR 1fff0000000[100000]

[ 40.369577] /pci@1f,0/pci@1,1/ebus@1/flashprom@10,400000: OBP Flash, RD 1fff0400000[200000] WR 1fff0400000[200000]

[ 40.505971] sd 0:0:0:0: [sda] Write cache: disabled, read cache: enabled, supports DPO and FUA

[ 40.619440] flash: probe of f0084fe0 failed with error -16

[ 40.691610] /pci@1f,0/pci@1,1/ebus@1/flashprom@10,800000: OBP Flash, RD 1fff0800000[200000] WR 1fff0800000[200000]

[ 40.827856] flash: probe of f0085178 failed with error -16

[ 64.439127] BUG: soft lockup - CPU#0 stuck for 23s! [swapper:1]

[...]

[ 65.911690] I7:

[ 65.963206] Call Trace:

[ 65.995345] [000000000099b2ec] openprom_init+0x8/0x78

[ 66.062946] [0000000000426bcc] do_one_initcall+0xec/0x140

[ 66.135131] [0000000000976914] kernel_init_freeable+0xfc/0x1a0

[ 66.213000] [00000000007dce44] kernel_init+0x4/0x100

[ 66.279456] [0000000000405f84] ret_from_fork+0x1c/0x2c

[ 66.348175] [0000000000000000] (null)

Disabling "/dev/openprom Device Support", "openprom /proc Entry", and "OBP Flash Device Support" from the kernel config solved the issue - I didn't bother tracking down exactly which module was the problem as I don't need openprom support, but presumably it could be determined quickly.

After rebuilding the kernel and initramfs, it boots successfully!

jackc@sparc0 ~ $ uname -a

Linux sparc0 3.12.13-jackc-v2 #2 Wed Apr 9 21:27:52 EDT 2014 sparc64 sun4u TI UltraSparc IIi (Sabre) GNU/Linux

One strange thing of note is that Gentoo went to a multilib system for Sparc in recent versions - that is, 64 bit kernel, 32 bit userland. (see http://www.gentoo.org/proj/en/base/sparc/multilib.xml)

jackc@sparc0 ~ $ file /boot/kernel-3.12.13-jackc-v2

/boot/kernel-3.12.13-gentoo-v1: ELF 64-bit MSB executable, SPARC V9, Sun UltraSPARC1 Extensions Required, relaxed memory ordering, version 1 (SYSV), statically linked, BuildID[sha1]=46a9535b4e050fbbdfdfe71cba5795502d127eb2, with unknown capability 0x410000000f676e75 = 0x1000000070433, not stripped

jackc@sparc0 ~ $ file /bin/ls

/bin/ls: ELF 32-bit MSB executable, SPARC32PLUS, V8+ Required, version 1 (SYSV), dynamically linked (uses shared libs), for GNU/Linux 2.6.16, stripped

Both 32- and 64- bit binaries can be compiled and run using the -m32 or -m64 flags, respectively, to sparc-unknown-linux-gnu-gcc.

Another thing of note is that the machine will hard lock under high load, such as compiling kernels. It just so happens that a thread came up on the sparclinux kernel mailing list about a memory management bug just recently, with a few patches slated to be merged into mainline: see http://marc.info/?t=139934944100001&r=1&w=2. I'll hopefully get around to applying and testing the patches in the near future.

Thursday, February 20. 2014

WWVB Receiver and Testing Transmitter

As I've been making steady progress on my Z80 computer, I've put a lot of thought into something to actually do with it when it's done. As tempting as it is to load up CP/M, add a video interface, and use it as my main machine, I think porting Firefox is too ambitious. For now, I've decided to add some more 7-segment displays and an RF front end to decode the WWVB time signal from an atomic clock in Colorado and hang it on the wall. If I'm still feeling ambitious, I'll add an Ethernet controller and write a driver and NTP daemon for it. Of course, this means I need a working receiver front end for 60kHz.

The WWVB signal is quite simple as far as decoding goes: the carrier has "full power" and "low power" states. The transmitter drops the carrier by 17 dB at the start of each second, and the length of time before it returns provides a single trinary value: Mark (0.8s), Not Set (0.2s), or Set (0.5s). A single digital line that goes low or high to follow the transmitter power state is quite easy to achieve with a fully analog circuit: after a bandpass to select only the 60kHz carrier, we send the signal into two peak detectors - one with a long time constant (low pass filter) to track the average signal power over several seconds, and one with a shorter time constant to track the per-second changes. Passing these two signals to a comparator, we get our nice single-bit TTL indicator of the current signal state. Plus, the longer time constant peak detector acts as an auto gain control, so no alignment is required.
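Once the receiver produces a single digital line, decoding comes down to timing how long the carrier stays low in each second. A minimal sketch of that classification step, using the nominal durations given above, is shown here. Picking the nearest nominal value is an assumed strategy for tolerating timing error, and the names are made up for illustration.

```python
# Nominal low-power durations in milliseconds, from the description above.
NOMINAL_MS = {"NOT SET": 200, "SET": 500, "MARK": 800}


def classify_pulse(low_ms: float) -> str:
    """Return the symbol whose nominal duration is closest to the measured one."""
    return min(NOMINAL_MS, key=lambda name: abs(NOMINAL_MS[name] - low_ms))


def classify_all(durations_ms):
    """Classify a sequence of measured low-power durations."""
    return [classify_pulse(d) for d in durations_ms]
```

Nearest-value matching keeps the decoder tolerant of measurement jitter, at the cost of never rejecting a pulse outright; a stricter decoder might discard durations that fall too far from every nominal value.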

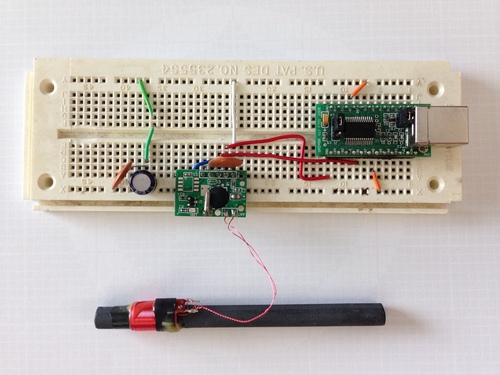

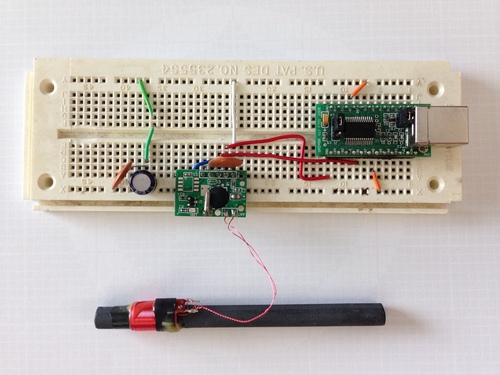

While I will make my own receiver as described above for the final version, I ordered a SYM-RFT module for testing. It includes an LED to indicate received carrier status and outputs the inverted carrier power state compared to the average carrier power. I quickly breadboarded it up to an FT245R USB chip and wrote a few lines of C to poll the power status and measure the length of state changes. Unfortunately, New England being quite far from Colorado, the signal is below the noise floor and has thus far been highly unreliable. The module simply flashes chaotically in the presence of any electronic noise like my monitors, and does nothing in isolation.

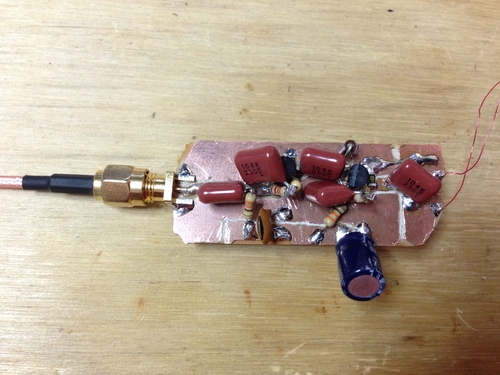

I built the above two-stage preamp from two 2N3904s to attach to the 60kHz antenna that came with the SYM-RFT module to see if I could see any carrier on the oscilloscope. While I did get all sorts of noise and impulses, there was very little if any energy at 60kHz. Drat.

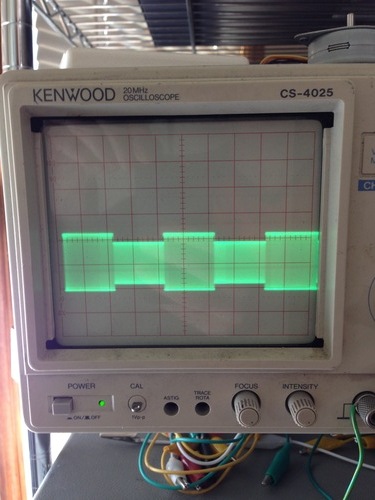

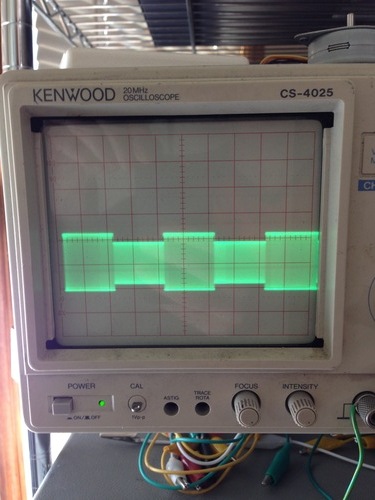

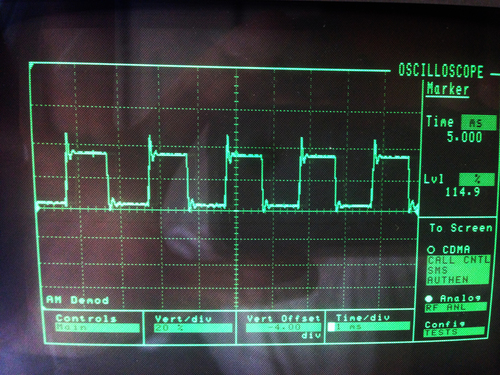

Luckily, I have the tools on hand to recreate the signal so I can at least test my receiver and code. I pulled out the FPGA RF DAC board I put together a while back, and a page of Verilog later I had a compliant WWVB transmitter:

After a small battle with PLLs to get a slow enough clock for 60kHz, both the SYM-RFT and my USB setup worked fine.

jackc@ichor ~/Projects/wwvb_usb $ sudo ./wwvb

MARK : 950 ms

NOT SET : 239 ms

SET : 503 ms

NOT SET : 194 ms

SET : 581 ms

MARK : 991 ms

^Cjackc@ichor ~/Projects/wwvb_usb $
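Turning a measured pulse length into one of the three symbols only takes a pair of thresholds. The C program itself isn't shown here, so this is just a sketch of one way to do it; the 350 ms and 650 ms cutoffs are assumed midpoints between the nominal 0.2 s, 0.5 s, and 0.8 s widths, and `classify_pulse` is a made-up name.

```python
def classify_pulse(low_ms):
    """Map a reduced-carrier duration in milliseconds to a WWVB symbol name."""
    if low_ms < 350:      # assumed midpoint between 200 ms and 500 ms
        return "NOT SET"
    if low_ms < 650:      # assumed midpoint between 500 ms and 800 ms
        return "SET"
    return "MARK"

# Durations taken from the run shown above.
measured_ms = [950, 239, 503, 194, 581, 991]
symbols = [classify_pulse(ms) for ms in measured_ms]
print(symbols)
```

Wide decision bands like these tolerate the tens of milliseconds of jitter visible in the measurements.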

Tuesday, January 14. 2014

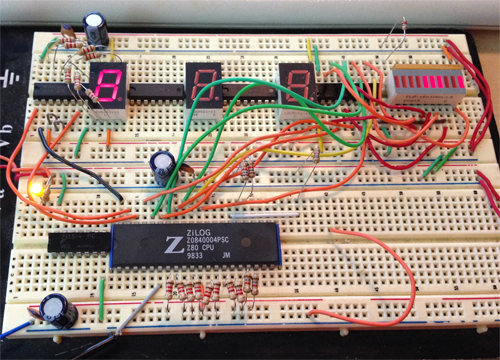

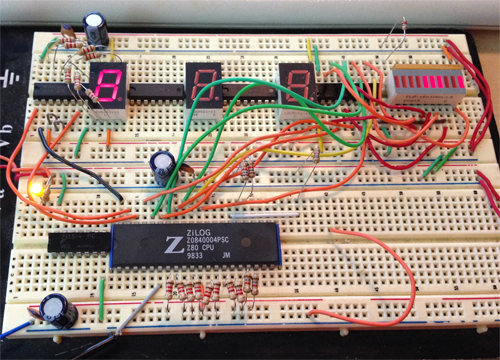

Z80 Computer and Assembler Adventures

I recently pulled out the Z80 project computer I built several years ago in the hopes of adding some hardware and features to it, but was sad to find that it didn't want to operate in the slightest. A quick connection of the logic analyzer showed that the CPU was not fetching instructions. Hrm. I needed to determine if my delicate, hand-wired little computer was at fault somewhere, or if the CPU itself was upset.

First, I breadboarded the following setup to test the Z80. Since the opcode for the NOP instruction is 0x00, setting the data bus to zeros and providing correct external state causes the chip to simply count up the address bus when clocked. I pulled the data bus to 0s with 1k resistors since the chip is in an undefined state before reset and could attempt to write to the bus - bad news if you're tied to a rail. I then pulled WAIT_, INT_, NMI_, and BUSREQ_ high (inactive), leaving just a RESET_ and a clock source to add.

I really like the simplicity of the Z80: not only can I run the chip with only four pullups and no external components, but it will run with clock speeds all the way down to DC. That means you can single step your computer with a debounced push button as your clock - try that on a modern CPU! For this particular exercise, I rigged a 555 timer to generate a 10 Hz clock - slow enough to watch the address bus with LEDs.

At the moment of truth, I got... nothing. The chip was tri-stating the address bus and not responding to RESET_. Unfortunately this meant I needed to dig deep into the depths to locate my box of Z80 items that I hadn't seen since college. However, not only did I find the box I was looking for quite quickly, I found a downright awesome pile of parts in there! I recalled that I had 3 extra Z80s and two 82C55's, but in reality I owned 16 Z80s of different vintages and speed grades, a few different types of 82C55, and an awesome pile of Z84 family peripheral controllers. I was very pleased.

Back at my desk, I tested all 17 CPUs (the one that was in my computer, plus the 16 extra ones I had). It turns out that all of my spares are in perfect working condition, and the one in the computer was in fact dead as a doornail. Good to know!

Next, I put a working CPU back in the computer, then wrote, assembled (using z80asm-1.2), and burned the following test program into flash:

LD A,0x80 ; 1000 0000 - tell the 82C55 to set all ports to outputs

OUT (3),A ; 0x03 is the control word address

LD A,1 ; set one bit that we will rotate in the loop

loop: OUT (0),A ; put the word out on port A of the 82C55

NOP

RLCA ; left-rotate reg A

JP loop

Sure enough, it worked great in hardware. Unfortunately, I decided to test the code in an emulator before running the hardware, and ended up spending many hours debugging and rewriting the emulator's memory loading functionality to be properly endian-safe and to autodetect binary blob types, but I'll leave that rant for another time. In any event, this was a great refresher in Z80 architecture and assembly as well as the inner workings of the emulator. Now that my Z80 computer is working, I have no excuse not to add more cool things to the project...
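For anyone without an emulator handy, the pattern the test program should put on port A of the 82C55 is easy to predict by hand. Here is a small sketch that models only the A register and the RLCA rotate (no flags, no timing); the function names are made up for illustration.

```python
def rlca(a):
    """Rotate an 8-bit value left by one; bit 7 wraps around to bit 0."""
    return ((a << 1) | (a >> 7)) & 0xFF

def port_a_sequence(steps, a=0x01):
    """Values written by 'OUT (0),A' over the first `steps` passes of the loop."""
    out = []
    for _ in range(steps):
        out.append(a)   # OUT (0),A
        a = rlca(a)     # RLCA
    return out

seq = port_a_sequence(9)
print([f"{v:08b}" for v in seq])
```

A single lit bit walks from bit 0 up to bit 7 and then wraps back around to bit 0.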

Monday, November 18. 2013

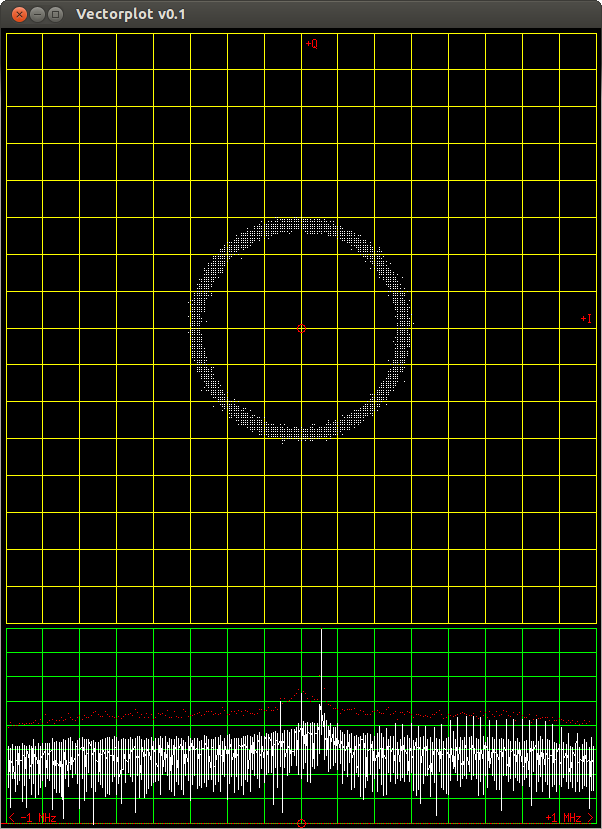

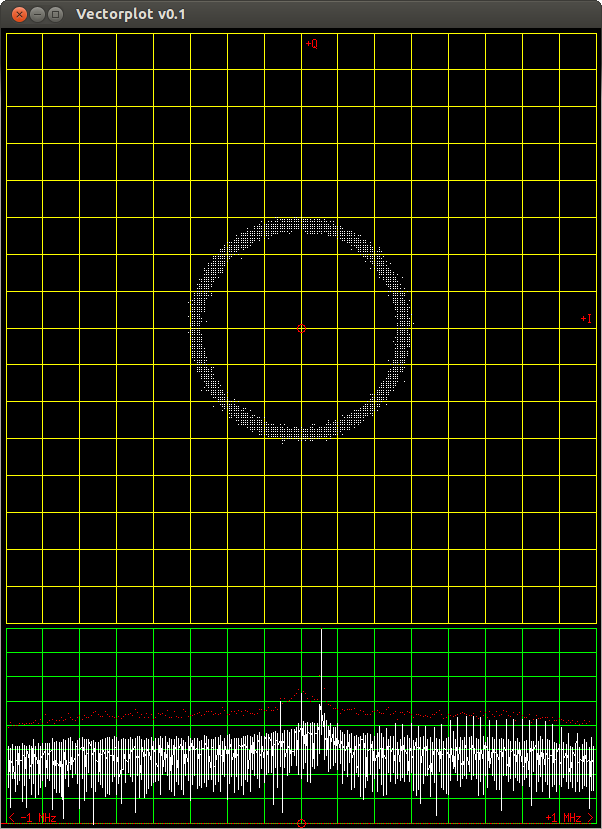

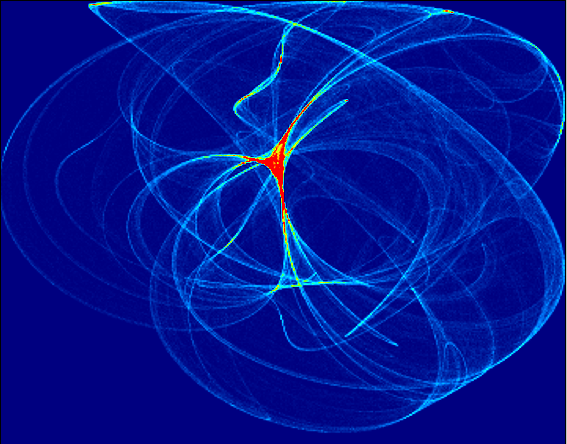

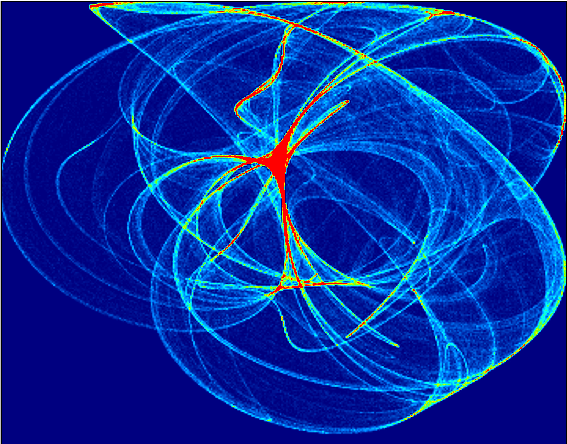

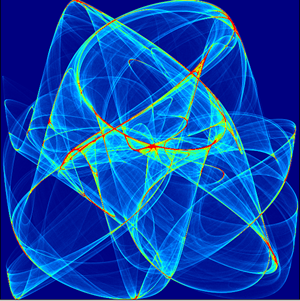

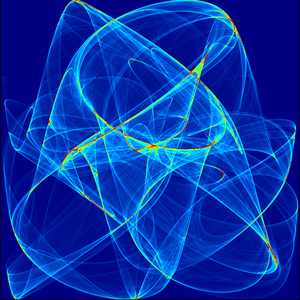

Vectorplot

Since I don't own a vector analyzer, I've been working on a software implementation for the RTL SDR in flat C (using libusb1) and X11 so I can test my FPGA QAM code. It actually turned out to be much simpler than expected, and is working well thus far.

Right now it plots raw I and Q values on the plane as well as calculated FFT data. Since I haven't implemented clock recovery yet, signals appear as a circle (recall that for a frequency difference of N Hz between the incoming signal and the sampling frequency, an N Hz rotation around the IQ plane results - thus even a difference of a few Hz is too quick to see anything but a circle). That shouldn't be very hard, and at that point it will become fully usable as a vector analyzer.
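The rotation effect is easy to reproduce numerically. This sketch is not taken from vectorplot; it just generates ideal unit-amplitude IQ samples with an assumed 5 Hz offset at an assumed 1 kHz sample rate, then measures how many times per second the point goes around the origin.

```python
import cmath
import math

def iq_samples(offset_hz, sample_rate, n):
    """Ideal IQ samples of a carrier that is offset_hz away from the tuned frequency."""
    return [cmath.exp(2j * math.pi * offset_hz * k / sample_rate) for k in range(n)]

def rotations_per_second(samples, sample_rate):
    """Average rotation rate, from the phase step between consecutive samples."""
    total_phase = 0.0
    for prev, cur in zip(samples, samples[1:]):
        total_phase += cmath.phase(cur * prev.conjugate())
    return total_phase / (2 * math.pi) * sample_rate / (len(samples) - 1)

samples = iq_samples(offset_hz=5.0, sample_rate=1000.0, n=1000)
rate = rotations_per_second(samples, 1000.0)
print(round(rate, 3))
```

Every sample has magnitude 1, so the plotted points trace a circle, and the measured rate matches the frequency offset.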

Code is here: https://github.com/jackcarrozzo/vectorplot.

Tuesday, October 22. 2013

Gyro-Stabilized DSLR Platform

I finally had time to put together one axis of my DSLR gyro frame today, and I am quite pleased with the results. The frame is big and heavy as it was originally intended to be a fixed-mount azimuth-altitude star and satellite rig for taking long exposures of planets and orbiting things, but it happened to be a good test bed for my gyro code. It's rock solid thus far:

Hopefully I will find time to build another axis soon so I can really see how solid both my math and my sensor are. I have a huge list of epic features to add to the project, too. I'm hoping I can get it solid enough to film with a 300mm lens and not need post-process stabilization.

Tuesday, June 4. 2013

FPGA QAM Generation

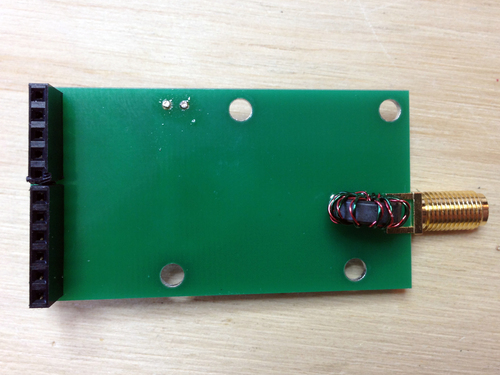

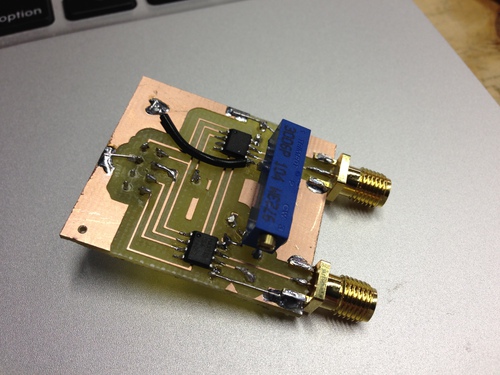

I recently drew up a board to connect a fast DAC to FPGA dev boards for the purpose of RF generation. I chose the DAC900E from TI as it's fast (165MS/s), pretty accurate (10-bit), and extremely easy to interface (latches values in on clock edges, doesn't require any setup or external stuff).

Normally I'd cut a simple one-layer board like this on my CNC mill, but I wanted to try a low cost hobbyist PCB fab I read about via Sparkfun. They did take a very long while, but came out beautifully. Also, when the boards finally arrived, I was pleased I had picked a DAC with so few external requirements to solder. The resistors are there to prevent an output buffer short in case I soldered badly, by the way - I don't own a hot air rework setup.

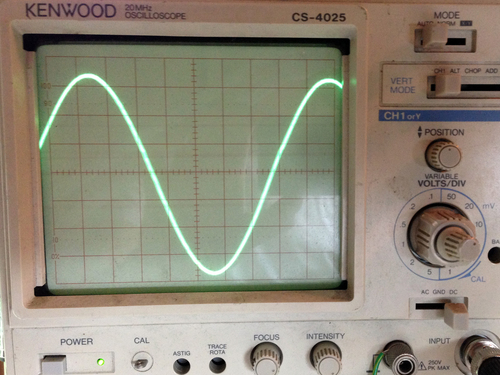

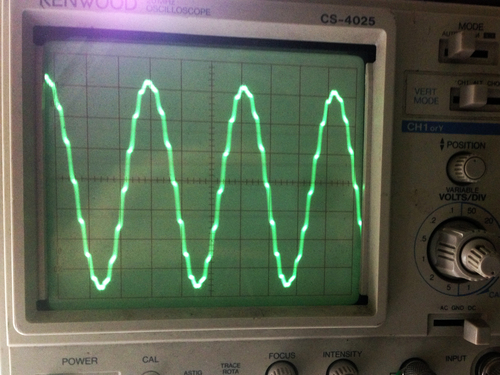

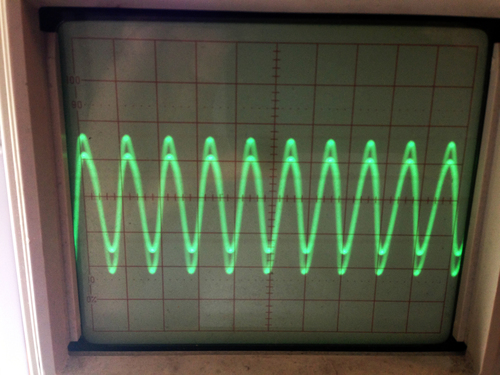

Right off the bat, I was pleased to see that my sine-generation test code worked great at various frequencies and bit depths (aliasing visible in the 2nd pic, its harmonics in the 3rd):

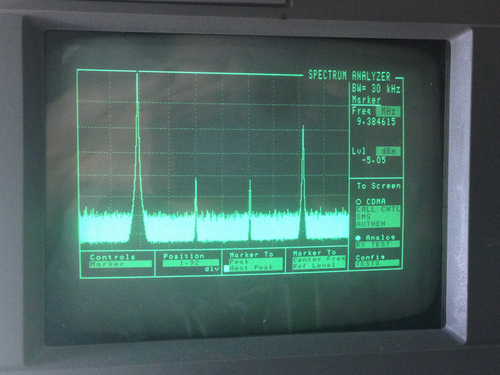

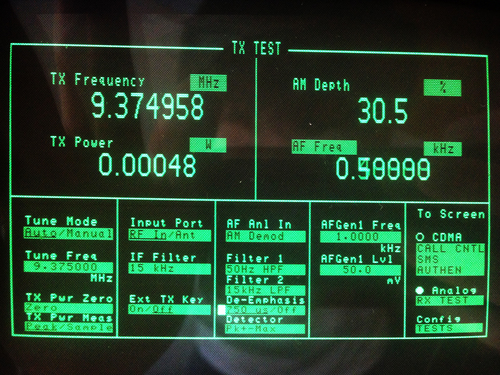

With a bit more hacking, I got the FT245R interface to work such that I send I and Q values via USB, the output value is calculated from an LUT (I originally was doing the math out, but hardware multiply is very slow), and sent to the DAC. In these pics, you can see that I was generating a 500 Hz tone over an AM carrier at 9.375 MHz (the 3rd image is the AM-demodulated signal shown on the spectrum analyzer).

My code is on GitHub here. It's by no means robust or feature-rich, but it does work well in my opinion. When I have time, I'd like to see how far I can take this design. Assuming I don't find any unforeseen roadblocks, the next step will be to make a board with two DAC900Es for I and Q generation that feed into a GHz-range QAM modulator that takes its carrier from a PLL on the FPGA, which will theoretically give me full TX control over a wide range of freqs.

Monday, March 25. 2013

Altera Quartus 12.1SP1 on Ubuntu 12.04 x86_64

I've been using Quartus in a virtual machine on my desktop since I hadn't been able to get it working on my 64 bit desktop. When I began using iVerilog and GtkWave for synthesis and simulation, my workflow efficiency went way up as I could code and debug everything in vim, then move to the annoying Quartus GUI app just to add pins and program a device. I then wrote a Makefile wrapper for the Quartus CLI tools, so once my pins were assigned I could make && make prog. However, it was still annoying that I had to boot a VM to program the device (as well as battle libvirt's USB passthrough, which works great except when it doesn't.)

Finally, I got Quartus to run properly on my desktop. I'm even more pleased than I expected to be, because it works great without the ia32-libs package (32-bit libraries... while theoretically it shouldn't break anything, I've had weird issues with it in the past). There are lots of docs on Google that describe this, but they are all old and still talk about the USBfs issue (Ubuntu dropped it from their default kernel, and it took Altera a while to switch to the new /sys/bus/usb/ system). Luckily for me, however, it turns out it's quite simple now, albeit a little ghetto (output from the scripts removed for clarity):

root@moose /tmp # tar xfz /home/jackc/Downloads/12.1sp1_243_quartus_free_linux.tar.gz

root@moose /tmp # cd 12.1sp1_243_quartus_free_linux/linux_installer/quartus_free

root@moose /tmp/12.1sp1_243_quartus_free_linux/linux_installer/quartus_free # ./install --auto /usr/local/altera/12.1sp1_243

root@moose /tmp/12.1sp1_243_quartus_free_linux/linux_installer/quartus_free # cd ../quartus_free_64bit/

root@moose /tmp/12.1sp1_243_quartus_free_linux/linux_installer/quartus_free_64bit # ./install --auto /usr/local/altera/12.1sp1_243

root@moose /tmp/12.1sp1_243_quartus_free_linux/linux_installer/quartus_free_64bit # cd

root@moose ~ # for x in /tmp/12.1sp1_243_quartus_free_linux/devices/web/*.qda; do LD_LIBRARY_PATH=/usr/local/altera/12.1sp1_243/quartus/linux64 /usr/local/altera/12.1sp1_243/quartus/linux64/quartus_sh --qinstall -qda "$x"; done

root@moose ~ # echo 'ATTR{idVendor}=="09fb", ATTR{idProduct}=="6001", MODE="666"' > /etc/udev/rules.d/altera-usb-blaster.rules

root@moose ~ # udevadm control --reload-rules

root@moose ~ # mkdir /etc/jtagd && touch /etc/jtagd

root@moose ~ # cp /usr/local/altera/12.1sp1_243/quartus/linux64/pgm_parts.txt /etc/jtagd/jtagd.pgm_parts

root@moose ~ # LD_LIBRARY_PATH=/usr/local/altera/12.1sp1_243/quartus/linux64/ /usr/local/altera/12.1sp1_243/quartus/linux64/jtagconfig

1) USB-Blaster(Altera) [2-1.7]

020F10DD EP3C(10|5)/EP4CE(10|6)

You don't need to add startup scripts for jtagd, because any of the Quartus tools that talk to it first check that it's running, and start it if not. Here's my quick and dirty Makefile for building and programming:

PROJ=iq_modulator

TOPBLOCK=modulator

QUBIN=/usr/local/altera/12.1sp1_243/quartus/linux64

ALTERALIBS=/usr/local/altera/12.1sp1_243/quartus/linux64

all: synth fit assemble netlist

synth:

LD_LIBRARY_PATH=$(ALTERALIBS) $(QUBIN)/quartus_map --read_settings_files=on --write_settings_files=off $(PROJ) -c $(TOPBLOCK)

fit:

LD_LIBRARY_PATH=$(ALTERALIBS) $(QUBIN)/quartus_fit --read_settings_files=off --write_settings_files=off $(PROJ) -c $(TOPBLOCK)

assemble:

LD_LIBRARY_PATH=$(ALTERALIBS) $(QUBIN)/quartus_asm --read_settings_files=off --write_settings_files=off $(PROJ) -c $(TOPBLOCK)

timing:

LD_LIBRARY_PATH=$(ALTERALIBS) $(QUBIN)/quartus_sta $(PROJ) -c $(TOPBLOCK)

netlist:

LD_LIBRARY_PATH=$(ALTERALIBS) $(QUBIN)/quartus_eda --read_settings_files=off --write_settings_files=off $(PROJ) -c $(TOPBLOCK)

prog:

LD_LIBRARY_PATH=$(ALTERALIBS) $(QUBIN)/quartus_pgm --no_banner -c 1 -m JTAG -o 'P;output_files/$(TOPBLOCK).sof'

jtagconfig:

LD_LIBRARY_PATH=$(ALTERALIBS) $(QUBIN)/jtagconfig

Remember to change the spaces to tabs in the Makefile definitions if you paste it in! Also, one known hassle is that you need to be root or use sudo for anything in the Makefile other than 'make jtagconfig'. When I get around to fixing that, I'll update it here. EDIT: duh, my fault. The problem was that my project directory had previous output owned by root from when I was testing, and the Quartus tools didn't know how to handle that error. Oops.

Sunday, January 6. 2013

Dual DAC I+Q Generation and Carrier Modulation

I wanted a simple, easy-to-interface QAM source to test some code I had written for the RTL-SDR, so I put together two small boards: one with two SPI DACs to generate the I and Q inputs, and one board with a single analog QAM modulator on it that takes the carrier, I, and Q signals and outputs the modulated carrier.

First, I made the dual DAC board with two MAX515s - simple, 10-bit, SPI DACs.

Aesthetically the board was not stunning - I used too large a drill for the through holes, and thus my pads were blown out. However, it was salvageable, so I continued putting it together and testing it by bit-banging the DACs with an FT245R dev board:

With that working well, I dead-bugged the modulator, a TRF370417 that will handle carrier freqs of 50 MHz through 6 GHz, to another board:

As pretty as that board turned out, it was unfortunately (and predictably) extremely noisy. Oh well, I suppose I will just have to make a proper board for all 3 chips...

Wednesday, October 24. 2012

FSK Demod and Clock Recovery

I've been working on coding some FSK demodulation and clock recovery in C, since GNUradio's documentation is terrible. My target bitstream is the control channel on the local Motorola Smartzone IIi system, which is 3600 baud. Ideally the code, when finished, will log calls and group associations to make pretty graphs (the system I have doing this currently is a terribly ghetto mess of Windows programs from 1998 and Perl).

The first thing I did was record samples of the stream in two ways: from the soundcard connected to the discriminator tap of my scanner, and from my RTL-SDR dongle. I normalized both outputs into unsigned chars (uint8_t) for simplicity, then coded a quick histogram to make sure my two FSK levels were easily visible. It turns out they are!

8: ######

16: ########################

24: #######################################################

32: ###########################################################################################

40: ###################################################################################################

48: ########################################

56: #############

64: ###########

72: ##########

80: #########

88: #########

96: ########

104: ########

112: ########

120: ########

128: #######

136: ########

144: ########

152: ########

160: ########

168: ########

176: #########

184: ##########

192: ###########

200: ###############

208: #############################

216: ###########################################################

224: #################################################################################

232: ###############################################################

240: #################################

248: ############

The labels on the left are the base value of the bin being graphed, i.e. "192" is the count of all samples with values from 192 to 199, inclusive. As you can see, there are lots of samples at both ends of the plot and very few in the center, which shows that our signal is clean.
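A histogram like the one above takes only a few lines. This sketch is not the original C; it assumes samples are already normalized to 0..255, uses 8-wide bins to match the labels, and runs on made-up sample data.

```python
def histogram(samples, bin_width=8):
    """Count 8-bit samples per bin; bin i covers i*bin_width .. i*bin_width + bin_width - 1."""
    counts = [0] * (256 // bin_width)
    for s in samples:
        counts[s // bin_width] += 1
    return counts

def render(counts, bin_width=8, max_bar=60):
    """One text line per bin, labeled with the bin's base value, scaled to max_bar chars."""
    peak = max(counts) or 1
    return [f"{i * bin_width}: " + "#" * round(c * max_bar / peak)
            for i, c in enumerate(counts)]

# Made-up data: two clusters near the FSK levels plus a few mid-range samples.
samples = [36] * 50 + [40] * 40 + [128] * 3 + [224] * 45 + [230] * 30
counts = histogram(samples)
print("\n".join(render(counts)))
```

Two tall bars with a nearly empty middle is the shape to look for.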

After I had confirmed that my samples were in fact sensible, I gave some thought to clock recovery. Since both my streams were recorded at 44.1 kHz in order to be soundcard-compatible, there are just over 12 samples per symbol (44100 / 3600 = 12.25). However, since that number isn't exactly 12, and since the local oscillators on each end differ slightly, one can't simply determine the center of one symbol then extrapolate ad infinitum from there.

There are entire books written on clock recovery schemes, but suffice to say this problem is generally solved by a PLL that gets 'nudged' forward or backwards in time based on the rate of change of the signal when the symbol is sampled. However, it's generally advantageous to try the simplest solution first.

I hacked up a quick sliding window sampling function that simply tries all 12 available offsets on the given "chunk" of samples (about 1/10th of a second worth), and determines which offset gives the lowest mean signal error. When the chunk size is small enough that the clock error during that period is less than one symbol wide, all the bits are successfully recovered. The process repeats at the next chunk, and no one is the wiser. Clearly this method isn't fast, but I was able to write and test it in an hour.
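The offset search can be sketched in a few lines. This is an illustration, not the original C: it assumes exactly 12 samples per symbol within a chunk, takes the two FSK levels (40 and 224) from the histogram peaks, and defines "signal error" as the distance from each picked sample to the nearer level, which is a guess at the metric. The synthetic test signal is smoothed so that samples near symbol edges fall between the levels.

```python
LOW, HIGH = 40, 224     # assumed FSK levels, read off the histogram peaks
SPS = 12                # samples per symbol tried by the search (assumed exact here)

def smooth(samples, half_width=3):
    """Moving average, so samples near a symbol edge land between the two levels."""
    out = []
    for i in range(len(samples)):
        window = samples[max(0, i - half_width): i + half_width + 1]
        out.append(sum(window) / len(window))
    return out

def mean_error(chunk, offset):
    """Mean distance from each sample picked at this offset to the nearer FSK level."""
    picks = chunk[offset::SPS]
    return sum(min(abs(p - LOW), abs(p - HIGH)) for p in picks) / len(picks)

def best_offset(chunk):
    """Try every offset and keep the (first) one with the lowest mean error."""
    return min(range(SPS), key=lambda off: mean_error(chunk, off))

def recover_bits(chunk):
    """Slice the chunk at the best offset and threshold halfway between the levels."""
    off = best_offset(chunk)
    mid = (LOW + HIGH) / 2
    return [1 if p > mid else 0 for p in chunk[off::SPS]]

bits = [0, 1, 1, 0, 1, 0, 0, 1, 0, 1]
raw = [HIGH if b else LOW for b in bits for _ in range(SPS)]
chunk = smooth(raw)
print(best_offset(chunk), recover_bits(chunk))
```

Offsets that land on a smeared symbol edge score a nonzero error, so the search settles on a sample point away from the edges and the bits come back intact.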

I was able to dump the binary stream from the samples recorded. However, it turns out the data is interleaved and I can't find a spec document (Smartzone is a closed standard). Unfortunately, until I figure that out, I'm stuck.

The first thing I did was record samples of the stream in two ways: from the soundcard connected to the discriminator tap of my scanner, and from my RTL-SDR dongle. I normalized both outputs into unsigned chars (uint8_t) for simplicity, then coded a quick histogram to make sure my two FSK levels were easily visible. It turns out they are!

8: ######

16: ########################

24: #######################################################

32: ###########################################################################################

40: ###################################################################################################

48: ########################################

56: #############

64: ###########

72: ##########

80: #########

88: #########

96: ########

104: ########

112: ########

120: ########

128: #######

136: ########

144: ########

152: ########

160: ########

168: ########

176: #########

184: ##########

192: ###########

200: ###############

208: #############################

216: ###########################################################

224: #################################################################################

232: ###############################################################

240: #################################

248: ############

The labels on the left are the base value of the bin being graphed, ie "192" is the sum of all samples from 192 to 199, inclusive. As you can see, there are lots of samples at both ends of the plot and very few in the center, which shows that our signal is clean.

After I had confirmed that my samples were in fact sensible, I gave some thought to clock recovery. Since both my streams were recorded at 44.1khz in order to be soundcard-compatible, there are just over 12 samples per symbol. However, since the number isn't exactly 12 as well as local oscillator differences, one can't simply determine the center of one symbol then extrapolate ad infinium from there.

There are entire books written on clock recovery schemes, but suffice to say this problem is generally solved by a PLL that gets 'nudged' forward or backwards in time based on the rate of change of the signal when the symbol is sampled. However, it's generally advantageous to try the simplest solution first.

I hacked up a quick sliding window sampling function that simply tries all 12 available offsets on the given "chunk" of samples (about 1/10th of a second worth), and determines which offset gives the lowest mean signal error. When the chunksize is small enough that the clock error during that period is less than one symbol wide, all the bits are successfully recovered. The process repeats at the next chunk, and no one is the wiser. Clearly this method isn't fast, but I was able to write and test it in an hour.

I was able to dump the binary stream from the recorded samples; however, it turns out the data is interleaved and I can't find a spec document (Smartzone is a closed standard). Unfortunately, until I figure that out, I'm stuck.

Tuesday, August 26. 2008

On Handy Type of Virtualization

Over the past two weeks I’ve been doing some work with virtualization in the context of Virtual Private Server setups – one physical host machine houses several “nodes” that do the actual processing. There are really two types of setups in this field: shared kernel and separate kernel.

In a strictly shared kernel setup (like FreeBSD), the root kernel simply creates a new process tree for its nodes, and marks each process with both a process ID (pid) AND its node ID. This is obviously quite efficient; however, it lacks the ability to do some of the handy things a separate kernel system provides.

In a separate kernel system (like Xen on Linux, or even VMware), much more RAM is necessary since separate copies of all the binaries are loaded into memory. However, that means you can have different kernel versions in different setups for testing, as well as better control over the “virtual” hardware presented to those node kernels (I am keenly reminded of the networking restrictions FreeBSD jails have, as shared kernel nodes).

Most real operating systems support one setup or the other, however there are a few libraries out there that run on several OSs to allow for both options. Here’s what I’ve found.

Linux - After installing the Xen package, Linux supports separate kernel nodes quite well. Despite some of the inherent inefficiencies of such a system, this setup is widely used and provided by companies such as Slicehost and Linode.

FreeBSD - Out of the box, FreeBSD includes support for Jails. This concept was introduced in FreeBSD 4.0 (2000) to basically extend the functionality of a chroot environment. However, there are quite a few shortcomings: each jail is IDENTIFIED by its IP address, rather than a jail ID or something of that sort. As such, each jail can have only ONE IPv4 address, and no IPv6. Further, there is no resource control to limit a jail's memory usage, CPU usage, or disk space (see end of paragraph for hacks). Also, a jail cannot do complicated firewalling or tunnels, but that is inherent to a shared kernel system so it can’t be blamed solely on FreeBSD’s implementation. It’s a shame out-of-the-box jails suck so much, since BSD in general is a great system. There are a few patches to provide more jail control; however, none are in the current source tree.

Solaris - Supporting 5 different types of virtualization, Solaris really takes the cake. Everything works perfectly to any level of configuration. That said, Solaris x86 really is a scary beast. Even if you manage to get it installed, your hardware may still crash once a month when it throws a particular hook. If you’re using Sparc hardware, however, this is most certainly the way to do things. (update 2011: the x86 builds are much more solid now)

In any event, I’m working on getting the patches to FreeBSD installed for jail control, as well as getting some nicer Sparc hardware to use Solaris.

Friday, November 24. 2006

MIT Splash '06

I taught two classes at Splash this year: Moderate Electronics: Breaking Things for Fun and Profit, and AI: Genetic Algorithms and Neural Networks. The former was simply a lecture; the latter, however, has the following files available:

GAs and NNs Presentation (PPT)

xor.c - Single-file NN implementation to solve nonlinear problems (xor)

nn.tar.gz - Very simple NN lib used in the class

Feel free to get in touch with me with questions!

Saturday, October 7. 2006

Neural Nets, Genetic Algorithms, and Cellular Automata

I read a book about biological computing recently, which got me interested in neural nets, genetic algorithms, and cellular automata. Perhaps I'll write up some of the science behind each of them soon, but for now here are a few test programs I wrote to play with this stuff.

cells.c: A quick cellular automata simulation to play with different edge conditions and such. The linked version has my setup that allows a colony to continue living:

neural-backprop_g.c: Flat neural net model with back propagation. The important stuff is in main() if you want to play with it. I plan to write a proper library with this soon, so look for that.

Monday, February 13. 2006

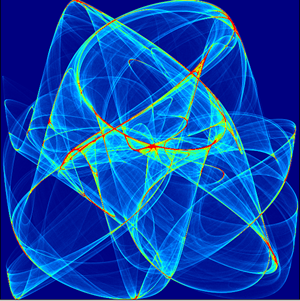

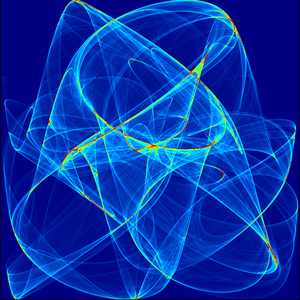

DCIR Renderer

Many people can go on and on about the “beauty of math”: everything fits, and that’s amazing. Personally, I think math is pretty handy, but not always that pretty. But hey, I suppose I proved myself wrong in this latest project.

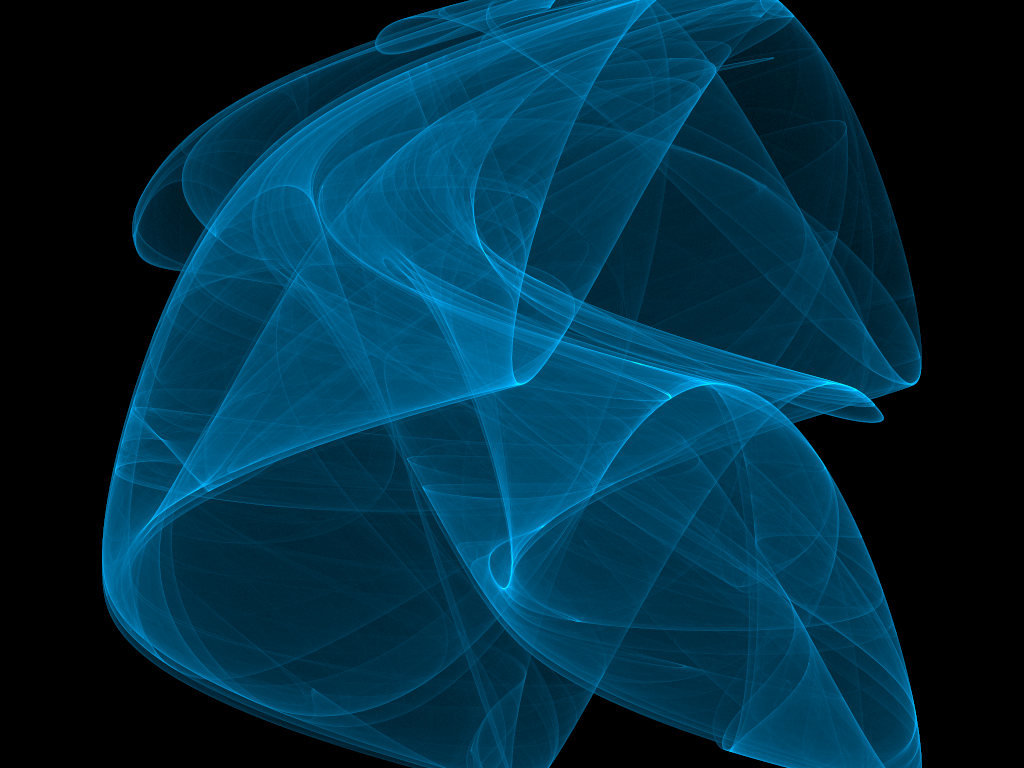

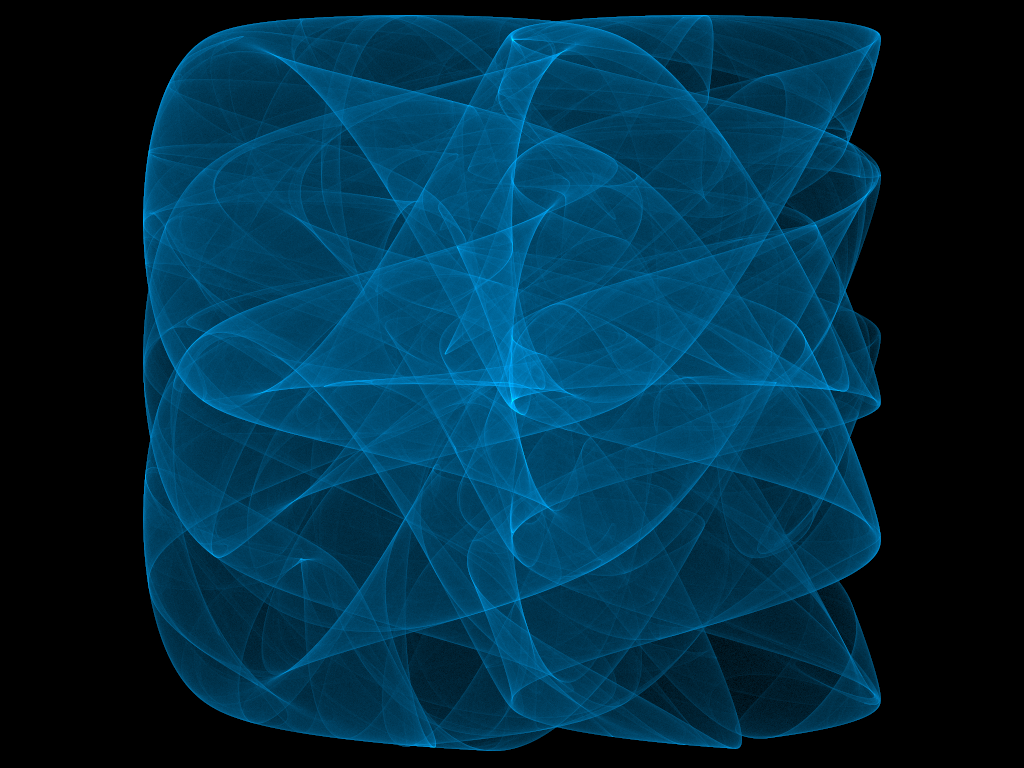

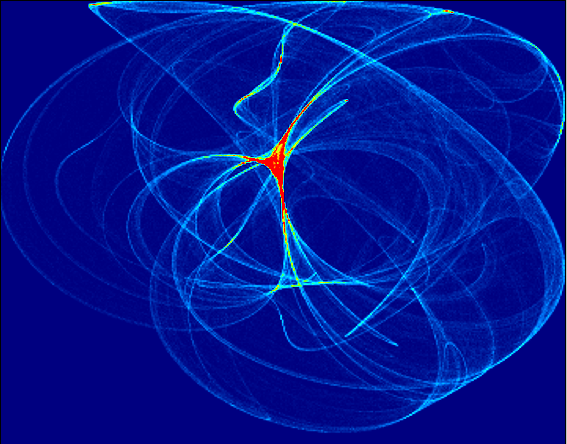

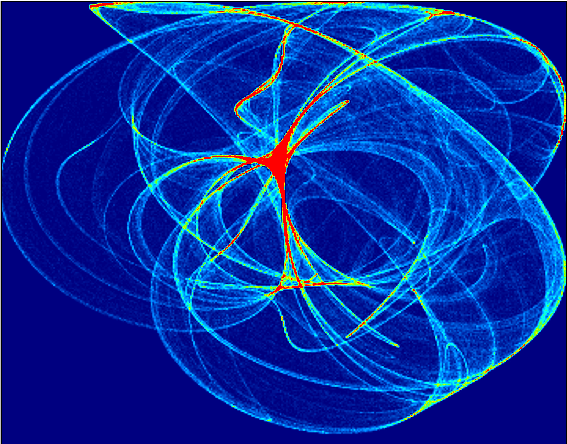

DCIR is a program that renders pretty pictures based on a chaotic set of functions, the De Jong equations:

x' = sin(ay) - cos(bx)

y' = sin(cx) - cos(dy)

Thus, starting with a random a, b, c, d, x, and y, we recursively solve for an x' and y' pair, then plug them back in while keeping a, b, c, and d constant. As opposed to your average function, such as f(x) = sin(x), these functions put out chaotic numbers. That is, they don’t follow any simple pattern; they simply hop around. But it turns out they tend to hop into some areas more often than others. If you take a look at the images below, you’ll see what I mean. The brighter an area is, the more times an iteration “hopped” into that area. (Clicking on a picture will make it bigger; visualizations performed using Winfeild by Andrei Chernousov.)

You can get the source here: single machine (dcir.c), distributed (dcir_d.c)

While this method is great, it’s outrageously slow. Thus, as the name suggests, DCIR is going to be “distributed”, or cluster-ready. At the moment the single-CPU code works great, but the MPI version leaves something to be desired… It sucks about 80 Mb/s of bandwidth, then dies. (Hey, it LOOKS like it should work.)

I would like to thank the developers of Fyre. Fyre is basically a better version of what I’ve made. We originally discussed how they had things set up, particularly how their clustering worked. Their version includes some nice editing tools that DCIR doesn’t, as well as a built-in visualizer and an animation option. The reason I wrote my own was more of a personal challenge than a real reason, though it will be nice to have a version that runs on MPI, as opposed to running the Fyre rendering server on all the nodes of the cluster.

For reference, here are some old images from the first version of DCIR, as well as some nice examples I’ve made with Fyre (the antialiasing sure is nice):

Trial 1 at different exposures:

Trial 2:

Fyre:

Sunday, December 18. 2005

Low Altitude Temperature Profile

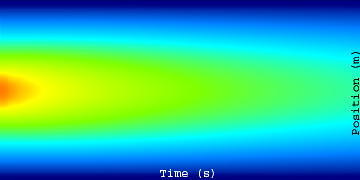

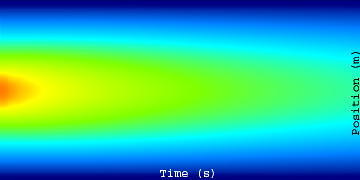

This project is to make a mathematical model of the temperature at low altitudes, and how it changes as the sun warms the earth. I’m basing the model around the one-dimensional heat equation. Here's the output from the model with cold boundaries and a hot initial center, linearly distributed along the left wall (time is to the right):
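For reference, the one-dimensional heat equation is u_t = alpha * u_xx. One common way to step it forward in time is the explicit finite-difference update sketched below. This is only an illustration under my own assumptions (a uniform grid, fixed-temperature end cells, and the hypothetical name `heat_step`), not necessarily how the actual model is written.

```c
#include <stddef.h>

/* Advance the n temperatures in u by one explicit time step.
 * r = alpha * dt / (dx * dx) and must be <= 0.5 for this scheme to remain
 * stable. The two end cells are held fixed (the "cold boundaries").
 * scratch must have room for n values. */
void heat_step(double *u, double *scratch, size_t n, double r)
{
    if (n < 3)
        return;   /* nothing between the two boundary cells */

    scratch[0] = u[0];
    scratch[n - 1] = u[n - 1];

    /* Each interior cell moves toward the average of its neighbours. */
    for (size_t i = 1; i + 1 < n; i++)
        scratch[i] = u[i] + r * (u[i + 1] - 2.0 * u[i] + u[i - 1]);

    for (size_t i = 0; i < n; i++)
        u[i] = scratch[i];
}
```

Starting with a hot cell in the middle and zeros at both ends, then calling `heat_step()` once per output column, gives the kind of picture described above: the heat spreads outward and decays as time moves to the right.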

The actual writing of the model was about as exciting as coding usually is… pretty boring to hear about. Thus, I’ll describe that process and how it works when I get a little more time, but at the moment here are the stories of testing the model using the rockets.

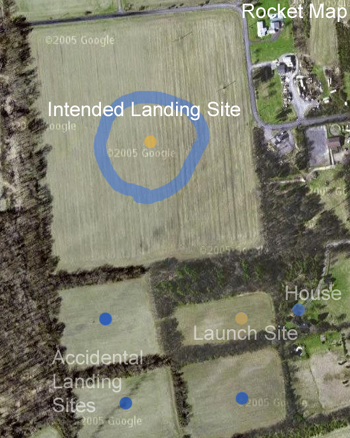

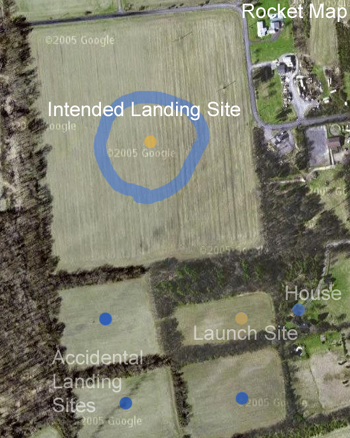

I started with 2 rockets; I currently have none. Thursday we went out and launched the first rocket without the computer in it to make sure it went where we wanted. It didn’t. In the past I’d used rockets with 4 motors and two stages, but separated, on either side of the body. As you can see here, this was not the case on the test rocket:

Here the bottom two motors were taped together… this turned out to be a bad idea. Apparently the motors do not burn at exactly the same rate, so when one finished and ignited its other stage, it was cast off… while still being attached to the other. Thus the bottom motor ripped off before it had lit its upper stage, leaving the rocket underpowered. Besides that, the launch rod we used in the test wasn’t nearly strong enough, and the rocket ended up leaving the pad at about a 45-degree angle. This wasn’t so bad until the previously explained stage switch, in which the sudden change in acceleration made the payload, and thus the CG, shift forward. This shifting mass caused the rocket to rotate further, and when the (single) upper stage motor fired, the rocket was pointed horizontally along the ground, about 200 feet up. The rocket continued missile-style for the last 3 seconds of the burn, then found its way into the trees at around 250 MPH. When we found it, there were pieces strewn in a 25 ft radius of the actual payload.

The payload was intended to split from the body at the end of the burn. Unfortunately it got stuck, and the pressure inside the fuselage went out a different way instead: through the sides. So the payload came down with a section of fuselage, parachute still stuffed inside. As it went through the trees, the fins were stripped off. The wooden nose cone must have hit a tree, because I’ve never seen dents like that in such a large piece of wood…